The LRU (Least Recently Used) list is a common data structure.

In simple terms, it arranges each piece of data in a sequence, placing the most recently used data at the front and the least used data at the back.typically implemented using a linked list.

The LRU list has a wide range of applications, and its main purpose is to reduce the operational cost of data retrieval. The specific approach is to place data retrieved from the disk into a cache, where the cached data is ordered in an LRU manner, with frequently used data at the front for easy access.

example

Ranking list(in the game or music chart e.t.c) can apply the LRU concept. Ranked that were popular a few hours ago may still be popular in the next hour, meaning those are likely to be repeatedly accessed and displayed on the page.

By retaining these commonly used words in the cache, the program can read them multiple times easily, reducing the cost of data retrieval.

LRU in InnoDB

InnoDB is MySQL’s default storage engine, and it incorporates many software design concepts worth learning. These designs are detailed and can inspire us to think more deeply and create better software.

As following as Below, I will share how InnoDB how to use LRU list optimizes reduce the operational cost of data retrieval.

LRU in Classic

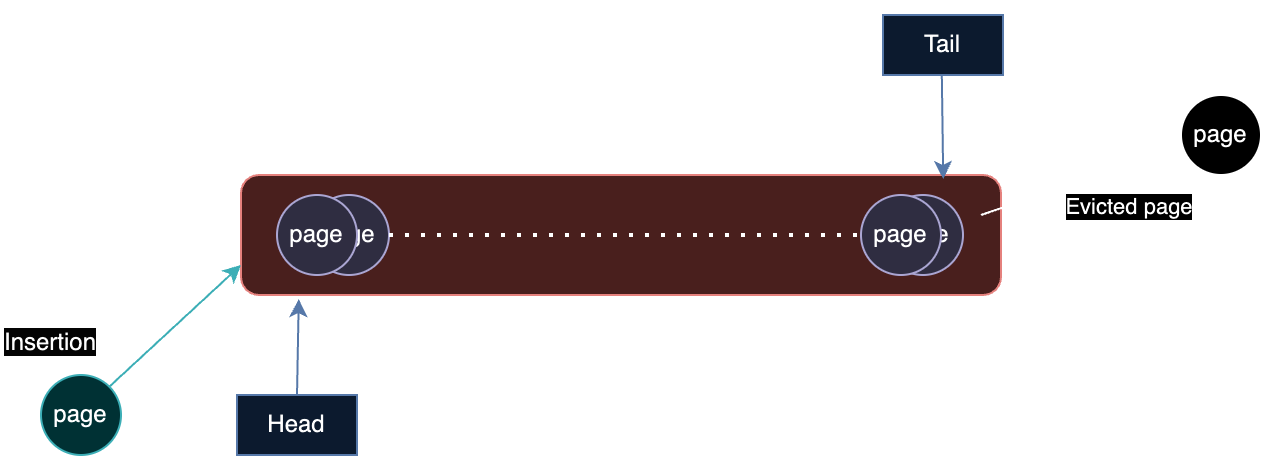

In InnoDB, the smallest unit of data access is called a page. Each node in the linked list stores a page. The cache memory space is called the Buffer Pool, as shown below.

Traditional LRU algorithms define a maximum number of nodes in the linked list. When a data page is read, it is considered most used and inserted at the front of the list.

If the node count exceeds the limit, the last node is evicted. If an existing data page in the list is read, it is removed from its original position and inserted at the front, keeping the front nodes as recently used.

Data pages are likely to be read only once and not accessed again when a full table scan or index scan is triggered.

theseone-time readpages would flood the front part of the linked list, being mistakenly identified asmost used.

cache space is occupied by these infrequently used pages, pushing out the genuinely frequently used pages, which then have to be read from the disk again, thereby defeating the purpose of caching.

LRU Optimized

To address this, InnoDB optimized the LRU algorithm, adjusting the definition of most used based on two key points:

-

Frequency Recognition

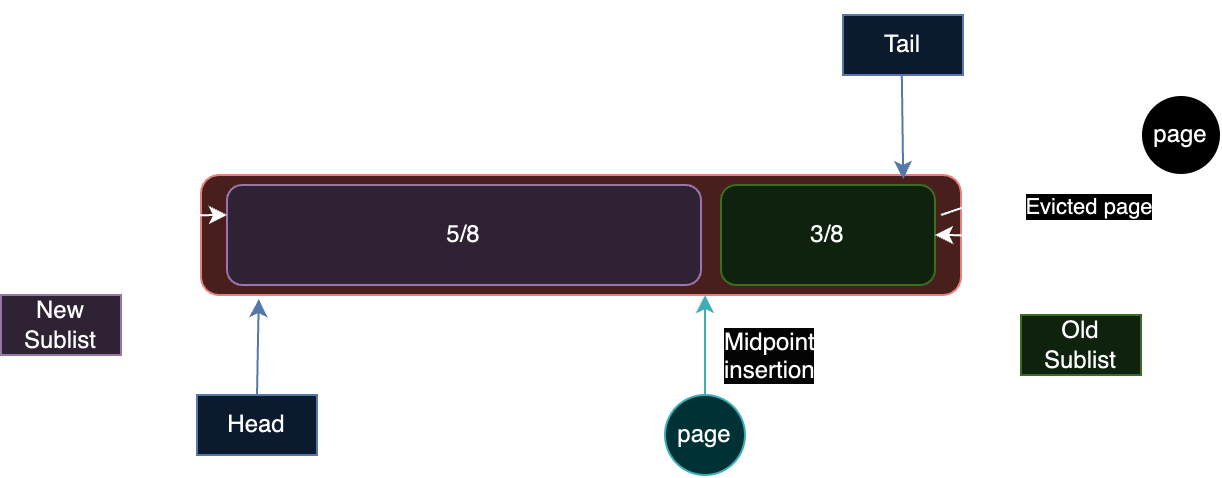

Recently used pages, when read from the disk, are not inserted directly at the front of the list but in the middle.

Only when they are read again from the cache are they consideredmost usedand moved to the front. This insertion method is called theMidpoint Insertion Strategy.A real-life example:

something you want to use is not placed directly on your desk because you might just a whim through it out of interest, not intending to use it thoroughly.The stuff, after being used from the cabinet, is placed in the middle of the desk (middle of the list). Only when you pick it up again to use is it moved to the front of the desk (front of the list).

as shown above, the

New Sub Listand theOld Sub List, with a boundary at approximately the last3/8 of the list. Data read from the disk is first placed at the head of the Old Sub List (3/8 position). When read again in the Buffer Pool, it is inserted at the head of the New Sub List.

When nodes exceed the limit, the last node in the Old Sub List is evicted. The parameterinnodb_old_blocks_pctsets the proportion of the Old Sub List, with a default value of 37% in MySQL 8.0, roughly 3/8. -

Time Recognition

InnoDB provides theinnodb_old_blocks_timeparameter, setting the minimum time a page must stay in theOld SubList before moving to theNew SubList.This parameter is in milliseconds, with a default value of 1000 in MySQL 8.0 witch prevents frequent list refreshing.

Through these two point as mentioned above, InnoDB ensures that truly necessary cached content is placed at the front, effectively enhancing read performance.

Summary

The hit rate is a critical consideration when designing caches.

InnoDB’s design philosophy, we see that it improves the LRU algorithm by adjusting frequency and time, redefining what most used data means, thereby enhancing the cache hit rate.